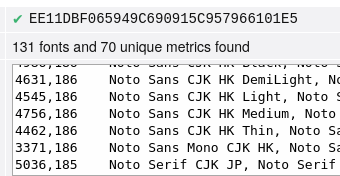

I’ve just been playing around with https://browserleaks.com/fonts. It seems no web browser provides adequate protection against this method of fingerprinting. In both Brave and LibreWolf, the tool detects rather unusual fonts that I have installed on my system, such as “IBM Plex” and “UD Digi Kyokasho”, which almost certainly make for a unique fingerprint. Tor Browser does slightly better, as it does not divulge these “weird” fonts. However, it still reveals that the Google Noto fonts are installed, which is far from universal: on a different machine with no Noto fonts installed, the tool does not report them.

For extra context: I’ve tested on Linux with the native Tor Browser and flatpak’d Brave and LibreWolf.

What can we do to protect ourselves from this method of fingerprinting? And why are all of these privacy-focused browsers vulnerable to it? Is work being done to mitigate this?
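For anyone wondering how a page can enumerate fonts when there is no API that simply lists them: a commonly described technique is to render a probe string with a candidate font placed in front of a generic fallback family and check whether the measured width changes. I don't know exactly what browserleaks.com runs, so treat this as a minimal TypeScript sketch of that general idea, not the site's code. The names `isFontInstalled`, `detectFonts`, `Measurer`, and the probe string are all hypothetical. Measurement is injected as a callback so the logic runs outside a browser; in a page it would typically wrap `CanvasRenderingContext2D.measureText`.

```typescript
// Hypothetical measurer: returns the rendered width of `text` drawn with the
// CSS font stack `fontStack`. In a browser this could wrap canvas measureText.
type Measurer = (text: string, fontStack: string) => number;

// Generic families every browser has; a missing font falls back to one of these.
const BASE_FAMILIES = ["monospace", "sans-serif", "serif"] as const;

// A mix of wide and narrow glyphs makes width differences between fonts visible.
const PROBE_TEXT = "mmmmmmmmmmlli";

// A font is treated as installed if, for at least one base family, putting it
// first in the stack changes the measured width compared with the bare fallback.
function isFontInstalled(font: string, measure: Measurer): boolean {
  return BASE_FAMILIES.some((base) => {
    const fallbackWidth = measure(PROBE_TEXT, base);
    const candidateWidth = measure(PROBE_TEXT, `"${font}", ${base}`);
    return candidateWidth !== fallbackWidth;
  });
}

// Returns the subset of candidate font names that appear to be installed.
function detectFonts(candidates: string[], measure: Measurer): string[] {
  return candidates.filter((font) => isFontInstalled(font, measure));
}

// Possible browser wiring (assumption, not executed here):
// const ctx = document.createElement("canvas").getContext("2d")!;
// const measure: Measurer = (text, stack) => {
//   ctx.font = `72px ${stack}`;
//   return ctx.measureText(text).width;
// };
// console.log(detectFonts(["IBM Plex Sans", "Noto Sans"], measure));
```

Because any primitive that exposes rendered width leaks the same information, hiding a single API doesn't help much; a browser has to limit which fonts a page can render with at all.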


Out of curiosity, how much of the internet is unusable with JS disabled? As in, how often do you run into sites that are essentially non-functional without it?

Quite a lot, actually. A lot of articles, blogs, and news sites are more usable without JavaScript than with it, because none of the annoying popups and shit can load. I suggest having two browser profiles: one with JavaScript enabled by default, and one with it disabled. For things like online shopping, you’d open the JS profile; for things where you expect to do a lot of reading, use the no-JS profile. uBlock Origin also makes it easy to temporarily enable or disable JS for a particular website.

Don’t bother; you can still be fingerprinted without JavaScript: https://noscriptfingerprint.com/

There’s also TLS-based fingerprinting, which Cloudflare uses to great success; it doesn’t even need HTML, CSS, or JS.
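To illustrate why no page content is needed: the server can fingerprint the fields the browser sends in its unencrypted TLS ClientHello. One widely documented scheme is JA3, which joins the TLS version, cipher suites, extensions, supported groups, and EC point formats into a string and takes its MD5 hash. This is a minimal TypeScript sketch of JA3-style hashing; I'm not claiming it is exactly what Cloudflare runs. It assumes the ClientHello has already been parsed into the hypothetical `ClientHello` shape below, and parsing raw TLS bytes is out of scope.

```typescript
import { createHash } from "node:crypto";

// Hypothetical parsed view of the fields a server can read from a ClientHello.
interface ClientHello {
  version: number;        // legacy_version, e.g. 771 (0x0303)
  ciphers: number[];      // offered cipher suites, in order
  extensions: number[];   // extension type IDs, in order
  curves: number[];       // supported_groups values
  pointFormats: number[]; // ec_point_formats values
}

// GREASE values (RFC 8701) look like 0x0a0a, 0x1a1a, ..., 0xfafa. Clients
// randomize them per connection, so JA3 ignores them.
function isGrease(v: number): boolean {
  return (v & 0x0f0f) === 0x0a0a && (v >> 8) === (v & 0xff);
}

// Joins one list as decimal values separated by "-", skipping GREASE values.
function joinField(values: number[]): string {
  return values.filter((v) => !isGrease(v)).join("-");
}

// Builds the JA3 string: version,ciphers,extensions,curves,pointFormats
function ja3String(hello: ClientHello): string {
  return [
    String(hello.version),
    joinField(hello.ciphers),
    joinField(hello.extensions),
    joinField(hello.curves),
    joinField(hello.pointFormats),
  ].join(",");
}

// The fingerprint is the lowercase hex MD5 digest of the JA3 string.
function ja3Hash(hello: ClientHello): string {
  return createHash("md5").update(ja3String(hello)).digest("hex");
}
```

Since these values come from the browser's TLS stack rather than from anything the page runs, disabling JavaScript leaves this fingerprint unchanged.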

I haven’t taken measurements, but there are many problematic sites these days. Lots of web developers fail to see the problems that JavaScript imposes on users, so they build web apps even when they’re serving static content, where a regular website (perhaps with optional JavaScript enhancements) would do just fine.

I selectively enable first-party scripts on a handful of sites that I regularly use and mostly trust (or at least tolerate). Many others can be read without scripts using Firefox Reader View. I generally ignore the rest, and look elsewhere for whatever information I’m after.

Thank you for the information! I kind of suspected it’d be like that tbh,