How is that wishful thinking? Open models are advancing just as fast as proprietary ones, and they’re now getting much wider usage as well. There are also economic drivers that favor open models even within commercial enterprises. For example, here’s the Airbnb CEO saying they prefer using Qwen to OpenAI because it’s more customizable and cheaper.

I expect that we’ll see the exact same thing happen that we saw with Linux-based infrastructure muscling out proprietary stuff like Windows servers and Unix. Open models will become foundational building blocks that people build stuff on top of.

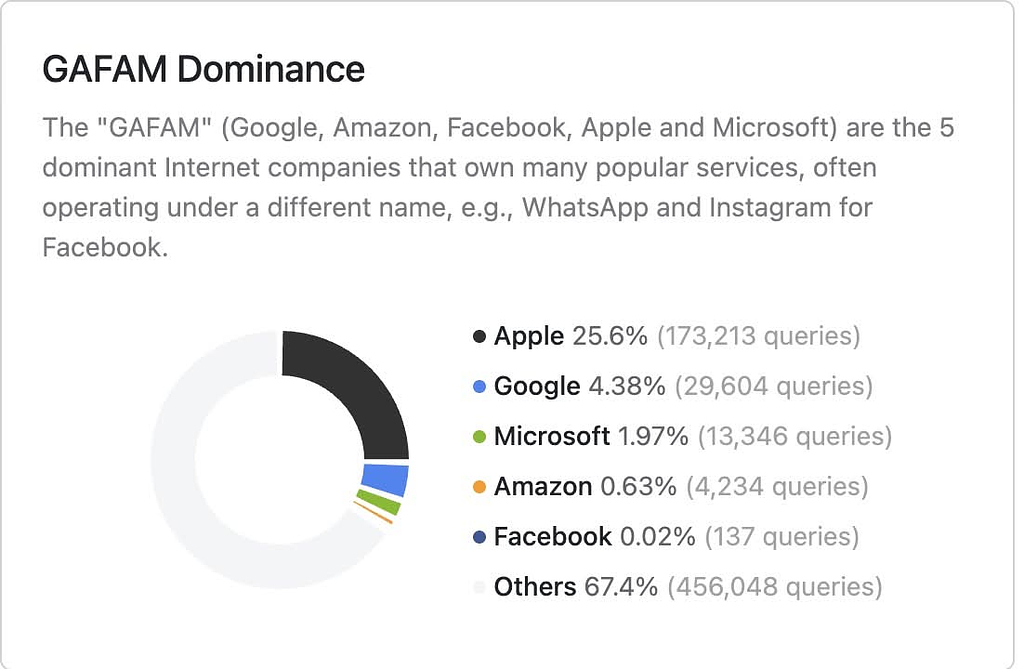

Metadata tracking should be very concerning to anyone who cares about privacy because it inherently builds a social graph. The server operators, or anyone who gets that data, can see a map of who is talking to whom. The content is secure, but the connections are not.

Being able to map out a network of relations is incredibly valuable. An intelligence agency can take the map of connections and overlay it with all the other data they vacuum up from other sources, such as location data, purchase histories, and social media activity. If you become a “person of interest” for any reason, they instantly have your entire social circle mapped out.
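To make the point concrete, here is a minimal sketch of how little it takes to turn bare metadata into a social circle. Everything in it is made up for illustration: the names, the `records` list, and the `build_graph` and `circle` helpers are hypothetical, and no real service's data format is implied. The only input is who contacted whom, with no message content at all.

```python
from collections import defaultdict

# Hypothetical metadata records: (sender, recipient) pairs only.
# No message content is needed for any of this.
records = [
    ("alice", "bob"),
    ("bob", "carol"),
    ("alice", "dave"),
    ("erin", "frank"),
]


def build_graph(records):
    """Build an undirected contact graph from (sender, recipient) pairs."""
    graph = defaultdict(set)
    for sender, recipient in records:
        graph[sender].add(recipient)
        graph[recipient].add(sender)
    return graph


def circle(graph, person, hops=2):
    """Return everyone within `hops` connections of `person`."""
    seen = {person}
    frontier = {person}
    for _ in range(hops):
        # Expand one hop outward, skipping people already visited.
        frontier = {n for p in frontier for n in graph[p]} - seen
        seen |= frontier
    return seen - {person}


graph = build_graph(records)
print(sorted(circle(graph, "alice")))
```

Once one person is flagged, everyone within a couple of hops falls out of a few lines of code. Joining that set against other datasets is the same kind of lookup.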

Worse, the act of seeking out encrypted communication is itself a red flag. It’s a perfect filter: “Show me everyone paranoid enough to use crypto.” You’re basically raising your hand. So, in a twisted way, tools for private conversations that share their metadata with third parties are perfect machines for mapping associations and identifying targets such as political dissidents.

This is the core of the issue, and it’s wild how many people don’t get it.

Your phone number is metadata. And people who think metadata is “just” data, or that cross-referencing is some kind of sci-fi nonsense, are fundamentally misunderstanding how modern surveillance works.

By requiring phone numbers, Signal, despite its good encryption, inherently builds a social graph. The server operators, or anyone who gets that data, can see a map of who is talking to whom. The content is secure, but the connections are not.

Being able to map out who talks to whom is incredibly valuable. A three-letter agency can take the map of connections and overlay it with all the other data they vacuum up from other sources, such as location data, purchase histories, and social media activity. If you become a “person of interest” for any reason, they instantly have your entire social circle mapped out.

Worse, the act of seeking out encrypted communication is itself a red flag. It’s a perfect filter: “Show me everyone paranoid enough to use crypto.” You’re basically raising your hand.

So, in a twisted way, Signal being a tool for private conversations makes it a perfect machine for mapping associations and identifying targets. The fact that it operates using a centralized server located in the US should worry people far more than it seems to.

The kicker is that thanks to gag orders, companies are legally forbidden from telling you if the feds come knocking for this data. So even if Signal’s intentions are pure, we’d never know how the data it collects is being used. The potential for abuse is baked right into the phone-number requirement.

EU quietly funded a “Thought Surveillance” project that scores citizens for ‘radicalization’ using L

Radicle is really worth checking out https://radicle.xyz/

Oh yeah the whole thing is a mess. It kind of blows my mind that we still don’t have a single common protocol that at least the open source world agrees on. Like there is a more or less fixed set of things chat apps need to do, we should be able to agree on something akin to ActivityPub here as a base.

The explanation is obvious. The phone numbers are a personally identifiable network of connections that is available to the people operating Signal servers. If this information is shared with the US government, then they can easily correlate it with all the other data they have. For example, if somebody is identified as a person of interest, then anybody they want to have secure communications with would also be of interest.

I don’t think they actually understand that Mastodon is a network in a traditional sense that works the way the internet was meant to operate before the corporate takeover. People have been so conditioned that the internet is just 5 corps in a trench coat, that they don’t have the cognitive tools to engage with something like the fediverse.

No, it’s because lemmy.ml doesn’t tolerate racism the way your fash instance does.

The point is that I, and many other people, have answered these questions many times. If you’re personally ignorant on the subject, then spend the time to educate yourself. You can start with the materials I’ve provided you. It’s not my job to educate you. I prefer having an interactive discussion with people who understand the subject they’re discussing and want to have a discussion in good faith. It’s very transparent that you are not.

I’ll let you have the last word here which you so desperately need.

Bye.

I just love how you keep acting like these questions haven’t been answered time and again. As if you came up with some novel line of questioning nobody has ever thought of before. Go read a book for once in your life. Here’s one you can start with. https://welshundergroundnetwork.cymru/wp-content/uploads/2020/04/blackshirts-and-reds-by-michael-parenti.pdf

And here’s how people who actually live in China characterize their modern government in one or two words. If you spent as much time educating yourself on the subjects you wish to debate as you do making a clown of yourself in public, you wouldn’t have to ask questions like this and embarrass yourself.

- https://www.newsweek.com/most-china-call-their-nation-democracy-most-us-say-america-isnt-1711176

- https://www.csmonitor.com/World/Asia-Pacific/2021/0218/Vilified-abroad-popular-at-home-China-s-Communist-Party-at-100

- https://www.bloomberg.com/opinion/articles/2020-06-26/which-nations-are-democracies-some-citizens-might-disagree

- https://web.archive.org/web/20230511041927/https://6389062.fs1.hubspotusercontent-na1.net/hubfs/6389062/Canva images/Democracy Perception Index 2023.pdf

- https://www.tbsnews.net/world/china-more-democratic-america-say-people-98686

- https://web.archive.org/web/20201229132410/https://en.news-front.info/2020/06/27/studies-have-shown-that-china-is-more-democratic-than-the-united-states-russia-is-nearby-and-ukraine-is-at-the-bottom/

You’re like a living embodiment of the Dunning-Kruger effect.

I’m actually building LoRAs for a project right now, and found that qwen3-8b-base is the most flexible model for that. The instruct version is already biased toward prompting and agreeing, but the base model is where it’s at.