Arthur Besse

cultural reviewer and dabbler in stylistic premonitions

- 14 Posts

- 111 Comments

reposting my comment from the thread yesterday:

reposting my comment in a thread last month about this:

in b4 haveibeenhaveibeenflocked.

they have a list of their current collection of 239 .csv files but sadly don’t appear to let you actually download them to query offline

they now have 519 sources, some of which are downloadable from muckrock but many aren’t.

i still don’t understand why this website isn’t open source and open data, and i strongly recommend thinking carefully about it (eg, thinking about if you’d mind if the existence of your query becomes known to police and/or the public) before deciding if you want to type a given plate number in to it.

Just… don’t connect them to the internet? Or if you must connect them for dumb shit like system updates, put them behind some access control where the only access they have is the server they get updates from.

I regret to inform you that preventing devices from getting online is getting more difficult: three years ago Amazon began allowing other companies’ products to use their BLE-and-LoRa-based mesh network to get online via your neighbors’ internet-connected devices.

But you can turn off sealed sender messages from anyone, so they’d have to already be a trusted contact

The setting to mitigate this attack (so that only people who know your username can do it, instead of anybody who knows your number) is called Who Can Find Me By Number. According to the docs, setting it to nobody requires also setting Who Can See My Number to nobody. Those two settings are both entirely unrelated to Signal’s “sealed sender” thing, which incidentally is itself cryptography theater.

Anyone can track WhatsApp and Signal users’ activity, knowing only their phone number: "Careless Whi

If a payment processor implemented this (or some other anonymous payment protocol), and customers paid them on their website instead of on the website of the company selling the phone number, yeah, it could make sense.

But that is not what is happening here: I clicked through on phreeli’s website and they’re loading Stripe js on their own site for credit cards and evidently using their own self-hosted thing for accepting a hilariously large number of cryptocurrencies (though all of the handful of common ones i tried yielded various errors rather than a payment address).

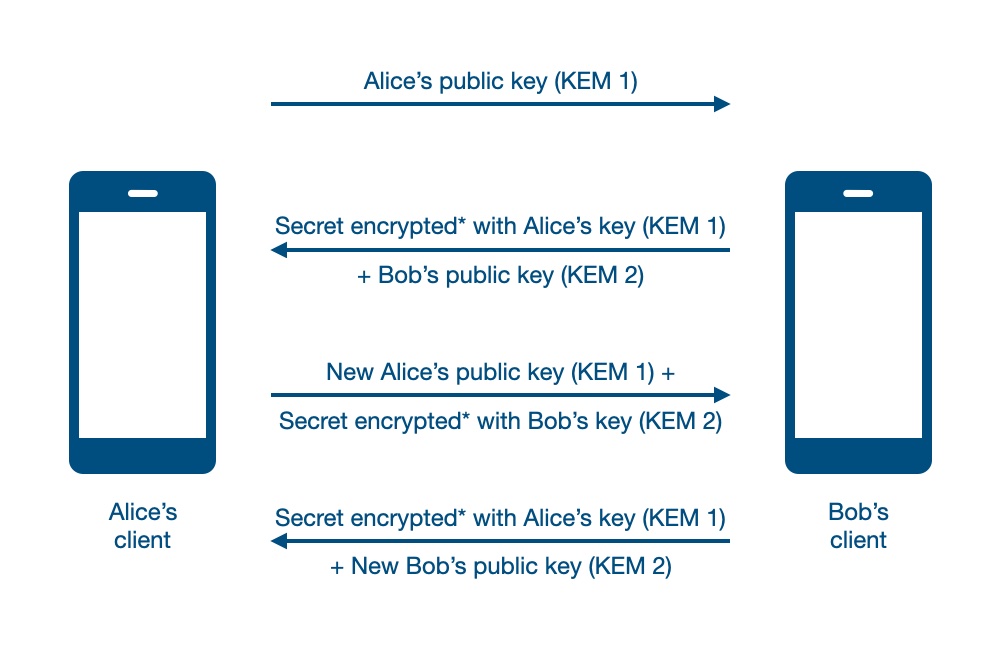

So like, it’s a situation where the “lock” has 2 keys, one that locks it and one that unlocks it

Precisely :) This is called asymmetric encryption, see https://en.wikipedia.org/wiki/Public-key_cryptography to learn more, or read on for a simple example.

I thought if you encrypt something with a key, you could basically “do it backwards” to get the original information

That is how it works in symmetric encryption.

In many real-world applications, a combination of the two is used: asymmetric encryption is used to encrypt - or to agree upon - a symmetric key which is used for encrypting the actual data.

Here is a simplified version of the Diffie–Hellman key exchange (which is an asymmetric encryption system which can be used to agree on a symmetric key while communicating over a non-confidential communication medium) using small numbers to help you wrap your head around the relationship between public and private keys. The only math you need to do to be able to reproduce this example on paper is exponentiation (which is just repeated multiplication).

Here is the setup:

- There is a base number which everyone uses (it’s part of the protocol), we’ll call it `g` and say it’s 2

- Alice picks a secret key `a` which we’ll say is 3. Alice’s public key `A` is `g^a` (`2^3`, or `2*2*2`) which is 8

- Bob picks a secret key `b` which we’ll say is 4. Bob’s public key `B` is `g^b` (`2^4`, or `2*2*2*2`) which is 16

- Alice and Bob publish their public keys.

Now, using the other’s public key and their own private key, both Alice and Bob can arrive at a shared secret by using the fact that `B^a` is equal to `A^b` (because `(g^a)^b` is equal to `g^(ab)`, which due to multiplication being commutative is also equal to `g^(ba)`).

So:

- Alice raises Bob’s public key to the power of her private key (`16^3`, or `16*16*16`) and gets 4096

- Bob raises Alice’s public key to the power of his private key (`8^4`, or `8*8*8*8`) and gets 4096

The result, which the two parties arrived at via different calculations, is the “shared secret” which can be used as a symmetric key to encrypt messages using some symmetric encryption system.

You can try this with other values for g, a, and b and confirm that Alice and Bob will always arrive at the same shared secret result.
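If you would rather check the arithmetic with code than on paper, here is a minimal Python sketch of the same toy exchange. The variable names are only for illustration, and like the example above it uses plain exponentiation with no modulus, so it is not secure in any way.

```python
# Toy Diffie-Hellman with the numbers from the example above (no modulus yet).
g = 2                  # public base, part of the protocol

a = 3                  # Alice's private key
A = g ** a             # Alice's public key: 2*2*2 = 8

b = 4                  # Bob's private key
B = g ** b             # Bob's public key: 2*2*2*2 = 16

alice_shared = B ** a  # Alice raises Bob's public key to her private key
bob_shared = A ** b    # Bob raises Alice's public key to his private key

print(A, B, alice_shared, bob_shared)
```

Changing `g`, `a`, and `b` and re-running should always leave `alice_shared` equal to `bob_shared`.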

Going from the above example to actually-useful cryptography requires a bit of less-simple math, but in summary:

To break this system and learn the shared secret, an adversary would want to learn the private key for one of the parties. To do this, they can simply undo the exponentiation: find the logarithm. With these small numbers, this is not difficult at all: knowing the base (2) and Alice’s public key (8) it is easy to compute the base-2 log of 8 and learn that a is 3.

The difficulty of computing the logarithm is the difficulty of breaking this system.

It turns out you can do arithmetic in a cyclic group (a concept which actually everyone has encountered from the way that we keep time - you’re performing mod 12 when you add 2 hours to 11pm and get 1am). A logarithm in a cyclic group is called a discrete logarithm, and finding it is a computationally hard problem. This means that (when using sufficiently large numbers for the keys and size of the cyclic group) this system can actually be secure. (However, it will break if/when someone builds a big enough quantum computer to run this algorithm…)
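As a rough sketch of what the cyclic-group version looks like, the same exchange can be done modulo a prime using Python's built-in three-argument `pow`. The values `p = 23` and `g = 5`, the private keys, and the helper `brute_force_dlog` are assumptions picked for illustration, not anything from a real deployment. With numbers this small the discrete logarithm can be found by trying every exponent, which is exactly what stops being feasible when the group is large.

```python
p = 23   # small prime modulus (illustrative assumption; real systems use huge primes)
g = 5    # base; 5 generates the whole multiplicative group mod 23

a, b = 4, 3                  # private keys for Alice and Bob
A = pow(g, a, p)             # Alice's public key: g^a mod p
B = pow(g, b, p)             # Bob's public key: g^b mod p

shared_alice = pow(B, a, p)  # Alice computes B^a mod p
shared_bob = pow(A, b, p)    # Bob computes A^b mod p


def brute_force_dlog(base, target, modulus):
    """Find x with base^x mod modulus == target by trying every exponent."""
    for x in range(1, modulus):
        if pow(base, x, modulus) == target:
            return x
    return None


# An eavesdropper who sees only g, p and A can recover a here,
# because the group has just 22 elements to search through.
recovered_a = brute_force_dlog(g, A, p)
print(A, B, shared_alice, shared_bob, recovered_a)
```

The loop in `brute_force_dlog` takes up to `p - 1` steps, so it is hopeless against a modulus thousands of bits long, while `pow(g, a, p)` stays fast at that size.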

Much respect to Nick for fighting for eleven years against the gag order he received, but i’m disappointed that he is now selling this service with cryptography theater privacy features.

Can someone with experience doing ZK Proofs please poke holes in this design?

One doesn’t need to know about zero-knowledge proofs to poke holes in this design.

Just read their whitepaper:

You can read the whole thing here but I’ll quote the important part: (emphasis mine)

Double-Blind Armadillo (aka Double Privacy Pass with Commitments) is a privacy-focused system architecture and cryptographic protocol designed around the principle that no single party should be able to link an individual’s real identity, payments, and phone records. Customers should be able to access services, manage payments, and make calls without having their activity tracked across systems. The system achieves this by partitioning critical information related to customer identities, payments, and phone usage into separate service components that communicate only through carefully controlled channels. Each component knows only the information necessary to perform its function and nothing more. For example, the payment service never learns which phone number belongs to a person, and the phone service never learns their name.

Note that parties (as in “no single party”) here are synonymous with service components.

So, if we assume that all of the cryptography does what it says it does, how would an attacker break this system?

By compromising (or simply controlling in the first place) more than one service component.

And:

I don’t see any claim that any of the service components are actually run by independent entities. And, even if they were supposedly run by different people, for the privacy of this system to stop being dependent on a single company behind it doing what they say they’re doing, there would also need to be some cryptographic mechanism for customers to verify that the independent entities supposedly operating different parts were in fact doing so.

In conclusion, yes, this is mostly cryptography-washing. Assuming good intentions (eg not being compromised from the start), the cryptographic system here would make it slightly more work for them to become compromised but does not really prevent anything.

The primary thing accomplished by cryptography here over just having a simple understandable “we don’t record the link between payment info and phone numbers, but you’ll just have to trust us on that” policy is to give potential customers a (false) sense of security.

SMS can have end to end encryption

in theory it can, but in practice i’m not aware of any software anyone uses today which does that. (are you? which?)

TextSecure, the predecessor to Signal, did actually originally use SMS to transport OTR-encrypted messages, but it stopped doing that and switched to requiring a data connection and using Amazon Web Services as an intermediary long ago (before it was merged with their calling app RedPhone and renamed to Signal).

edit: i forgot, there was also an SMS-encrypting fork of TextSecure called SMSSecure, later renamed Silence. It hasn’t been updated in 5 (on github) or 6 (on f-droid) years but maybe it still works? 🤷

a summary can be helpfull

No. LLMs can’t reliably summarize without inserting made-up things, which your now-deleted comment (which can still be read in the modlog here) is a great example of. I’m not going to waste my time reading the whole thing to see how much is right or wrong but it literally fabricated a nonexistent URL 😂

Please don’t ever post an LLM summary again.

If they don’t use a bank, how are they pulling money out for it to be tracked?

One example I mentioned in my comment you’re replying to is check cashing services. Millions of people in the US receive money via things like check or money order and need to change it to cash despite not having a bank account to deposit it in; this usually involves identifying themselves.

See also payday loans, etc.

See, none of it makes any sense lmfao.

I assume you didn’t click (and translate) the link in the comment prior to mine which you replied to?

If you do, from there you can find some industry news about Serial Number Reading (SNR) technology.

I don’t know how widely deployed that technology is, but there is clear evidence that it does exist and is used for various purposes.

I ONLY give other people cash, all my other purchases are debit/credit.

If you always use card payments whenever it’s possible, it obviously isn’t necessary to analyze your cash transactions to learn where you are because you are already disclosing it :)

Like MOST people and stores since Covid

There are close to 2 billion unbanked people in the world. In the US, it’s less than 6% nationally, but over 10% in some states.

Many people who are not unbanked also often avoid electronic payments for privacy/security and other reasons.

The cash serial number tracking being described in this thread is useful for locating the neighborhoods frequented by someone who (a) avoids using electronic payments, and (b) maybe obtains cash from an ATM (or perhaps check-cashing service, in the case of an unbanked person) in places other than the neighborhoods they live in or frequent.

At launch (in 2021) the FireTV was not on the list of Sidewalk-enabled products. But Sidewalk was enabled without user consent on many existing devices (and has been found to re-enable itself after being disabled), and FireTV devices all have at least the necessary Bluetooth radio (even if not the LoRa part; Sidewalk can use either or both), so they could become Sidewalk-enabled by a software update in the future. So I would still say that Sidewalk is a reason (among many) to boycott FireTV along with the rest of Amazon’s products.

The takeaway that Amazon built their own mesh network so that their products in neighboring homes can exfiltrate data via each other whenever any one of them can get online is not false.

Social graph connections can be automatically inferred from location data. This has been done by governments (example) for a long time and is also done by private companies (sorry I can’t find a link at the moment).

The text of the new Texas law is here.

I wonder if this will apply to/be enforced on FDroid and Obtainium?

copying my comment from another thread:

“App store” means a publicly available Internet website, software application, or other electronic service that distributes software applications from the owner or developer of a software application to the user of a mobile device.

This sounds like it could apply not only to F-Droid but also to any website distributing APKs, and actually, every other software distribution system too (eg, linux distros…) which include software which could be run on a “mobile device” (the definition of which also can be read as including a laptop).

otoh i think they might have made a mistake and left a loophole; all of the requirements seem to depend on an age verification “under Section 121.021” and Section 121.021 says:

When an individual in this state creates an account with an app store, the owner of the app store shall use a commercially reasonable method of verification to verify the individual’s age category

I’m not a lawyer but I don’t see how this imposes any requirements on “app stores” which simply don’t have any account mechanism to begin with :)

(Not to say that this isn’t still immediately super harmful for the majority of the people who get their apps from Google and Apple…)

you have posted only two comments on lemmy so far, and both are telling people to buy this phone. do you have any affiliation with it, and/or are you planning to continue using your lemmy account solely to encourage people to buy it?

also, since you seem to know about this, i am curious if you can enlighten me: are there any benefits of iodéOS compared with LineageOS which it is a derivative of? i didn’t find a comparison between them on the website.

Makes me curious as to what happened here

You can see the deleted comments in the modlog. There is also a thread for discussing the deletions in this thread here.

they were suggesting a solution, this proof-of-work web firewall: https://github.com/TecharoHQ/anubis

For chat, something with e2ee and without phone numbers or centralized metadata. SimpleX, Matrix, XMPP, etc - each have their own problems, but at least they aren’t centralizing everyone’s metadata with a CIA contractor like Jeff Bezos like Signal is.

For email, I’d recommend finding small-to-medium-sized operators who seem both honest and competent. Anyone offering snakeoil privacy features such as browser-based e2ee is failing in at least one of those two categories.

No, it isn’t about hiding your identity from the people you send messages to - it’s about the server (and anyone with access to it) knowing who communicates with who, and when.

Michael Hayden (former director of both the NSA and CIA) famously acknowledged that they literally “kill people based on metadata”; from Snowden disclosures we know that they share this type of data with even 3rd-tier partner countries when it is politically beneficial.

Signal has long claimed that they don’t record such metadata, but, since they outsource the keeping of their promises to Amazon, they decided they needed to make a stronger claim so they now claim that they can’t record it because the sender is encrypted (so only the recipient knows who sent it). But, since they must know your IP anyway, from which you need to authenticate to receive messages, this is clearly security theater: Amazon (and any intelligence agency who can compel them, or compel an employee of theirs) can still trivially infer this metadata.

This would be less damaging if it was easy to have multiple Signal identities, but due to their insistence on requiring a phone number (which you no longer need to share with your contacts but must still share with the Amazon-hosted Signal server) most people have only one account which is strongly linked to many other facets of their online life.

Though few things make any attempt to protect metadata, anything without the phone number requirement is better than Signal. And Signal’s dishonest incoherent-threat-model-having “sealed sender” is a gigantic red flag.

more important than expecting ip obfuscation or sealed sender from signal

People are only expecting metadata protection (which is what “sealed sender”, a term Signal themselves created, purports to do) because Signal dishonestly says they are providing it. The fact that they implemented this feature in their protocol is one of the reasons they should be distrusted.

machine translation of a paragraph of the original article:

The police’s solution: It’s none other than a Trojan. Unable to break the encryption, they infect the traffickers’ phones with malware, subject to judicial authorization. This way, they gain full access to the device: apps, images, documents, and conversations. Obviously, GrapheneOS isn’t capable of protecting itself (like any Android) against this malware.

original text in Castilian

La solución de la policía. Esa no es otra que un troyano. Ante la imposibilidad de romper el cifrado, infectan los teléfonos de los traficantes con software malicioso, previa autorización judicial. De esta manera, consiguen acceso total al dispositivo: apps, imágenes, documentos y conversaciones. Evidentemente, GrapheneOS no es capaz de protegerse (como cualquier Android) ante este malware.

🤔

in other news, the market price of hacked credentials for MAGA-friendly social media accounts:

📈

note

in case it is unclear to anyone: the above is a joke.

in all seriousness, renaming someone else’s account and presenting it to CBP as one’s own would be dangerous and inadvisable. a more prudent course of action at this time is to avoid traveling to the united states.

were you careful to be sure to get the parts that have the key’s name and email address?

It should be if there is chunks missing its unusable. At least thats my thinking, since gpg is usually a binary and ascii armor makes it human readable. As long as a person cannot guess the blacked out parts, there shouldnt be any data.

you are mistaken. A PGP key is a binary structure which includes the metadata. PGP’s “ascii-armor” means base64-encoding that binary structure (and putting the BEGIN and END header lines around it). One can decode fragments of a base64-encoded string without having the whole thing. To confirm this, you can use a tool like xxd (or hexdump) - try pasting half of your ascii-armored key in to base64 -d | xxd (and hit enter and ctrl-D to terminate the input) and you will see the binary structure as hex and ascii - including the key metadata. i think either half will do, as PGP keys typically have their metadata in there at least twice.
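The same point can be sketched in Python without touching a real key. Everything below is made up for illustration: `blob` is a stand-in byte string rather than an actual PGP packet, and `decode_fragment` is a hypothetical helper. It shows that a chunk cut from the middle of a base64 string still decodes to readable bytes once it is lined up on a 4-character boundary.

```python
import base64

# Stand-in for a binary key structure: filler bytes around a user ID string.
# (This is NOT a real PGP packet, just bytes with readable metadata inside.)
user_id = b"Alice Example <alice@example.org>"
blob = bytes(range(30)) + user_id + bytes(range(100, 160))

armored = base64.b64encode(blob).decode("ascii")

# Pretend the beginning and end were blacked out, leaving only this middle part.
fragment = armored[37:120]


def decode_fragment(text):
    """Decode a base64 fragment at each of the four possible alignments."""
    results = []
    for offset in range(4):
        chunk = text[offset:]
        chunk = chunk[: len(chunk) // 4 * 4]  # b64decode needs a multiple of 4 chars
        results.append(base64.b64decode(chunk))
    return results


for offset, decoded in enumerate(decode_fragment(fragment)):
    # Only the correctly aligned offset yields the readable user ID.
    print(offset, user_id in decoded)
```

Base64 maps each group of 4 characters to 3 bytes independently of its neighbours, which is why trying the four alignments is enough to recover whatever falls inside the surviving part.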

TLDR: this is way more broken than I initially realized

To clarify a few things:

-No JavaScript is sent after the file metadata is submitted

So, when i wrote “downloaders send the filename to the server prior to the server sending them the javascript” in my first comment, I hadn’t looked closely enough - I had just uploaded a file and saw that the download link included the filename in the query part of the URL (the part between the ? and the #). This is the first thing that a user sends when downloading, before the server serves the javascript, so, the server clearly can decide to serve malicious javascript or not based on the filename (as well as the user’s IP).

However, looking again now, I see it is actually much worse - you are sending the password in the URL query too! So, there is no need to ever serve malicious javascript because currently the password is always being sent to the server.

As I said before, the way other similar sites do this is by including the key in the URL fragment which is not sent to the server (unless the javascript decides to send it). I stopped reading when I saw the filename was sent to the server and didn’t realize you were actually including the password as a query parameter too!
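To make the query-versus-fragment distinction concrete, here is a small sketch using Python's standard `urllib.parse`. The URL, its parameter names, and the password are invented for illustration and are not the real site's URL scheme. The point is only that the path and query are what end up in the HTTP request, while the fragment stays in the browser unless a script chooses to send it.

```python
from urllib.parse import urlsplit

# Hypothetical download link with secrets in both the query and the fragment.
url = "https://files.example/download?name=report.pdf&password=hunter2#this-part-stays-local"
parts = urlsplit(url)

# What the browser puts in the HTTP request: path plus query, never the fragment.
request_target = parts.path + ("?" + parts.query if parts.query else "")

print("sent to server:  ", request_target)
print("kept in browser: ", parts.fragment)
```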

😱

The rest of this reply was written when I was under the mistaken assumption that the user needed to type in the password.

That’s a fundamental limitation of browser-delivered JavaScript, and I fully acknowledge it.

Do you acknowledge it anywhere other than in your reply to me here?

This post encouraging people to rely on your service says “That means even I, the creator, can’t decrypt or access the files.” To acknowledge the limitations of browser-based e2ee I think you would actually need to say something like “That means even I, the creator, can’t decrypt or access the files (unless I serve a modified version of the code to some users sometimes, which I technically could very easily do and it is extremely unlikely that it would ever be detected because there is no mechanism in browsers to ensure that the javascript people are running is always the same code that auditors could/would ever audit).”

The text on your website also does not acknowledge the flawed paradigm in any way.

This page says “Even if someone compromised the server, they’d find only encrypted files with no keys attached — which makes the data unreadable and meaningless to attackers.” To acknowledge the problem here this sentence would need to say approximately the same as what I posted above, except replacing “unless I serve” with “unless the person who compromised it serves”. That page goes on to say that “Journalists and whistleblowers sharing sensitive information securely” are among the people who this service is intended for.

The server still being able to serve malicious JS is a valid and well-known concern.

Do you think it is actually well understood by most people who would consider relying on the confidentiality provided by your service?

Again, I’m sorry to be discouraging here, but: I think you should drastically re-frame what you’re offering to inform people that it is best-effort and the confidentiality provided is not actually something to be relied upon alone. The front page currently says it offers “End-to-end encryption for complete security”. If someone wants/needs to encrypt files so that a website operator cannot see the contents, then doing so using software ephemerally delivered from that same website is not sufficient: they should encrypt the file first using a non-web-based tool.

update: actually you should take the site down, at least until you make it stop sending the key to the server.

Btw, DeadDrop was the original name of Aaron Swartz’ software which later became SecureDrop.

it’s zero-knowledge encryption. That means even I, the creator, can’t decrypt or access the files.

I’m sorry to say… this is not quite true. You (or your web host, or a MITM adversary in possession of a certificate authority key) can replace the source code at any time - and can do so on a per-user basis, targeting specific IP addresses - to make it exfiltrate the secret key from the uploader or downloader.

Anyone can audit the code you’ve published, but it is very difficult to be sure that the code one has audited is the same as the code that is being run each time one is using someone else’s website.

This website has a rather harsh description of the problem: https://www.devever.net/~hl/webcrypto … which concludes that all web-based cryptography like this is fundamentally snake oil.

Aside from the entire paradigm of doing end-to-end encryption using javascript that is re-delivered by a webserver at each use being fundamentally flawed, there are a few other problems with your design:

- allowing users to choose a password and using it as the key means that most users’ keys can be easily brute-forced. (Since users need to copy+paste a URL anyway, it would make more sense to require them to transmit a high-entropy key along with it.)

- the filenames are visible to the server

- downloaders send the filename to the server prior to the server sending them the javascript which prompts for the password and decrypts the file. this means you have the ability to target maliciously modified versions of the javascript not only by IP but also by filename.

There are many similar browser-based things which still have the problem of being browser-based but which do not have these three problems: they store the file under a random identifier (or a hash of the ciphertext), and include a high-entropy key in the “fragment” part of the URL (the part after the # symbol) which is by default not sent to the server but is readable by the javascript. (Note that the javascript still can send the fragment to the server, however… it’s just that by default the browser does not.)
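A minimal sketch of how such a share link could be built, assuming a made-up host `files.example` and a made-up `/d/<id>` path layout (neither is from any particular service): the storage identifier is random, so it says nothing about the filename, and the key is random rather than user-chosen, so it can't be brute-forced like a password.

```python
import secrets
from urllib.parse import urlsplit

# Random storage identifier: reveals nothing about the original filename.
file_id = secrets.token_urlsafe(16)

# Random key: 32 bytes from the OS CSPRNG, i.e. 256 bits of entropy.
key = secrets.token_urlsafe(32)

# The key goes after the '#', so the browser does not include it in requests.
share_url = f"https://files.example/d/{file_id}#{key}"

parts = urlsplit(share_url)
print("server sees: ", parts.path)
print("browser only:", parts.fragment)
```

This only fixes the three problems listed above. The javascript served by the site can still read the fragment and send it anywhere, so the underlying problem with browser-delivered code remains.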

I hope this assessment is not too discouraging, and I wish you well on your programming journey!

When it’s libre software, we’re not banned from fixing it.

Signal is a company and a network service and a protocol and some libre software.

Anyone can modify the client software (though you can’t actually distribute modified versions via Apple’s iOS App Store, for reasons explained below) but if a 3rd party actually “fixed” the problems I’ve been talking about here then it really wouldn’t make any sense to call that Signal anymore because it would be a different (and incompatible) protocol.

Only Signal (the company) can approve of changes to Signal (the protocol and service).

Here is why forks of Signal for iOS, like most seemingly-GPLv3 software for iOS, cannot be distributed via the App Store

Apple does not distribute GPLv3-licensed binaries of iOS software. When they distribute binaries compiled from GPLv3-licensed source code, it is because they have received another license to distribute those binaries from the copyright holder(s).

The reason Apple does not distribute GPLv3-licensed binaries for iOS is that they cannot: the way that iOS works inherently violates the “installation information” (aka anti-tivoization) clause of GPLv3. Apple requires users to agree to additional terms before they can run a modified version of a program, which is precisely what this clause of GPLv3 prohibits.

This is why, unlike the Android version of Signal, there are no forks of Signal for iOS.

The way to have the source code for an iOS program be GPLv3 licensed and actually be meaningfully forkable is to have a license exception like nextcloud/ios/COPYING.iOS. So far, at least, this allows Apple to distribute (non-GPLv3!) binaries of any future modified versions of the software which anyone might make. (Legal interpretations could change though, so, it is probably safer to pick a non-GPLv3 license if you’re starting a new iOS project and have a choice of licenses.)

Anyway, the reason Signal for iOS is GPLv3 and they do not do what NextCloud does here is because they only want to appear to be free/libre software - they do not actually want people to fork their software.

Only Signal (the company) is allowed to give Apple permission to distribute binaries to users. The rest of us have a GPLv3 license for the source code, but that does not let us distribute binaries to users via the distribution channel where nearly all iOS users get their software.

Downvoted as you let them bait you. Escaping WhatsApp and Discord, anti-libre software, is more important.

I don’t know what you mean by “bait” here, but…

Escaping to a phone-number-requiring, centralized-on-Amazon, closed-source-server-having, marketed-to-activists, built-with-funding-from-Radio-Free-Asia (for the specific purpose of being used by people opposing governments which the US considers adversaries) service which makes downright dishonest claims of having a cryptographically-ensured inability to collect metadata? No thanks.

(fuck whatsapp and discord too, of course.)
