https://www.404media.co/revealed-the-country-that-secretly-wiretapped-the-world-for-the-fbi/

It’s already behind a paywall. But it was really a sting operation, using fake “secure” phones, to catch criminals, by skirting constitutional requirements.

I personally think you have to be careful. If they don’t like your application and find that you did not disclose the information, that can become a justification for rejecting it. Remember that there are third parties that correlate internet data at massive scale and sell it to governments and corporations. Unless your accounts definitely cannot be linked to your real identity, there is a chance they will find out which social accounts you have anyway.

This article specifically addresses visa applications. So, if the person is already applying for citizenship, they most likely already have residency, which doesn’t require a visa on entry. There also seems to be a different set of rules for people already in the country. From the article:

And while the court recognized the First Amendment rights of noncitizens currently present in the United States who limit their online speech because they may need to renew a visa in the future, it held that the federal government’s regulation of immigration should be granted significant deference.

Also use encrypted DNS (DNS over HTTPS or DNS over TLS), e.g. Private DNS on Android. https://adguard-dns.io/en/public-dns.html

With airlines, it still seems to be voluntary and justified by convenience. (https://www.businessinsider.com/american-airlines-facial-recognition-boarding-dfw-aviation-trend-2019-8)

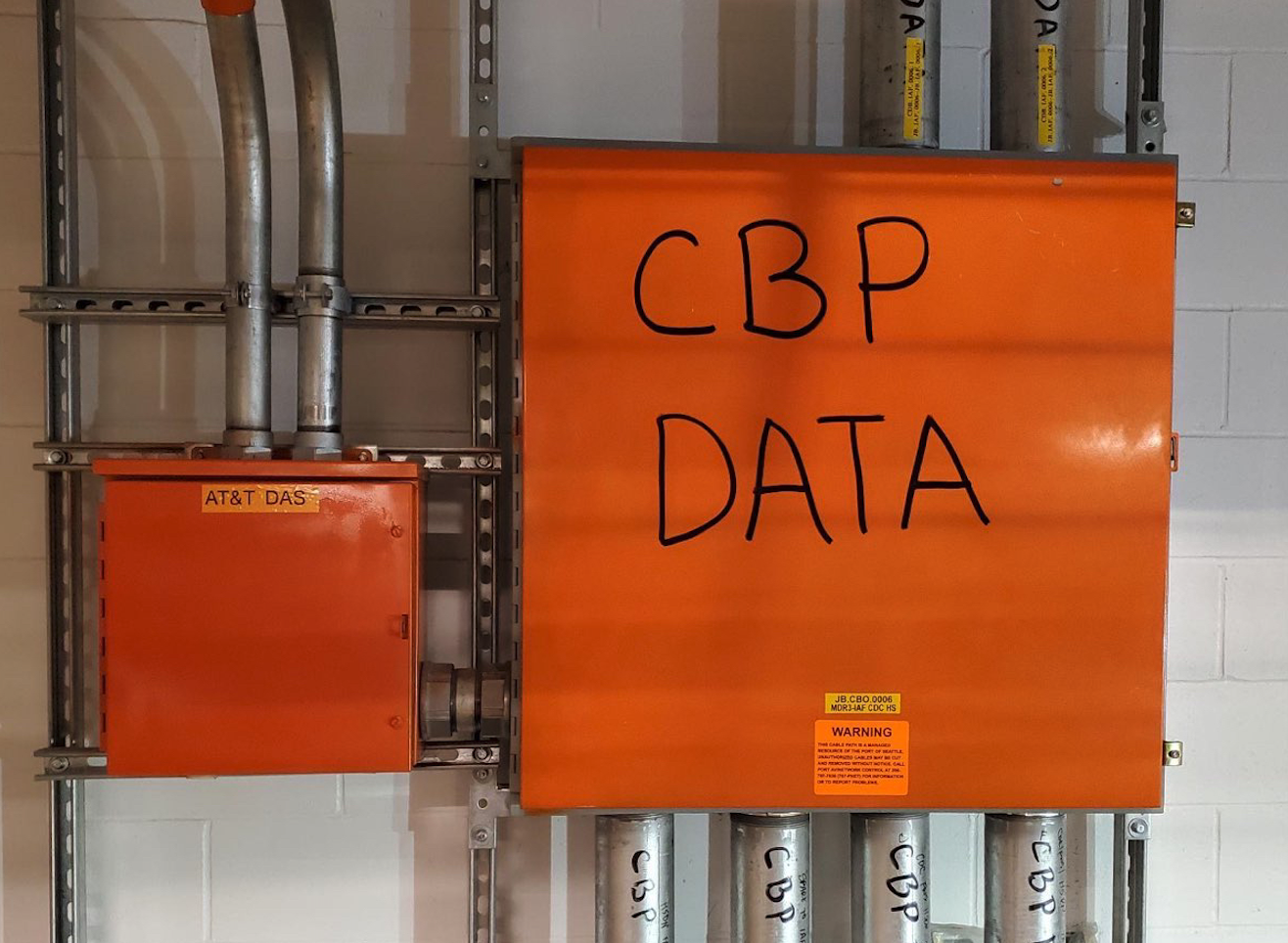

For border-crossing trips handled by CBP, it seems you can still explicitly opt out, possibly at the cost of some inconvenience. But it may be less voluntary in the long run (also because of those inconveniences).

Foreigners who need to travel in and out of the US will always be more willing to submit to the whims of airport/US authorities.

They’re trying to push it up to 97% compliance, apparently mandated by Congress. So, generally, don’t cross the US border. Here’s an article from EFF: https://www.eff.org/deeplinks/2017/08/end-biometric-border-screening

DHS recently took the alarming position that “the only way for an individual to ensure he or she is not subject to collection of biometric information when traveling internationally is to refrain from traveling.”

Try 2FAS. Open-sourced. Also works on Android. Has a browser extension that allows automatic 2FA entry paired with a phone.

OTOH, if you need a Windows client, then Authy may be the way to go. You need to religiously copy the TOTP secret when setting up each account and save it somewhere else, though. Because Authy doesn’t officially allow export, it might be a bitch to move to other authenticators.
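To see why saving the TOTP secret is what makes you portable, here is a minimal sketch of the standard TOTP calculation (RFC 6238, HMAC-SHA1, 30-second steps), which is what most authenticator apps use by default. The function name `totp` and its parameters are my own for illustration, and the secret in the usage note is the RFC's published test key, not a real one. Anything that has the Base32 secret can produce the same codes.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) from a Base32 secret."""
    # Secrets are often shown in lowercase groups separated by spaces;
    # normalise and restore any '=' padding that was dropped.
    cleaned = secret_b32.replace(" ", "").upper()
    cleaned += "=" * (-len(cleaned) % 8)
    key = base64.b32decode(cleaned)

    # The moving factor is the number of whole time steps since the Unix epoch.
    now = time.time() if at_time is None else at_time
    counter = int(now // step)

    # HOTP (RFC 4226): HMAC over the 8-byte big-endian counter,
    # then dynamic truncation to a 31-bit integer.
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF

    return str(value % 10 ** digits).zfill(digits)
```

With the RFC 6238 test key, `totp("GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ", at_time=59, digits=8)` gives `"94287082"`, matching the RFC's test vector. So if you kept the secret, any other authenticator (or a few lines of code) can take over.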

- Claire Woodall-Vogg, the executive director of the Milwaukee Election Commission, was harassed and threatened after an innocuous email exchange with an election consultant was published by conservative news outlets.

- One of the harassers used ProtonMail, an encrypted email service, to send Woodall-Vogg a threatening email.

- The FBI acquired data from Proton Technologies, the owner of ProtonMail, to help them identify the anonymous emailer.

- The FBI was able to find the suspect’s identity and conduct a sweep across their internet accounts, but they were not charged with any crimes.

- Woodall-Vogg said that the harassment has not continued recently.

- ProtonMail said that they employ several teams to handle instances of abuse on their platform and that they only provide metadata to law enforcement agencies.

- ProtonMail received 6,995 orders for data in 2022, of which it contested 1,038.

They said in the past that to retain anonymity, users should use Tor to access the service.

Also beware that any web/app client that automatically loads image links in a post/comment/message lets the other person embed a tracking image: when your client fetches it, their server sees your IP address, and possibly your browser/other info as well. A VPN or Tor hides your real IP address from that server.

It’s like your email client not retrieving images automatically, to prevent spammers from getting any info about your interactions with their spam emails.
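As a rough illustration of what a client does before deciding whether to auto-load images, here is a small sketch using only Python's standard library that lists the remote image URLs in an HTML message. The names `RemoteImageFinder` and `find_remote_images` are hypothetical, and this is not how any particular mail or Lemmy client implements it. It only looks at `<img src>`, so CSS backgrounds and other remote resources are out of scope.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class RemoteImageFinder(HTMLParser):
    """Collect <img> sources that would trigger a network request."""

    def __init__(self):
        super().__init__()
        self.remote_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        for name, value in attrs:
            if name != "src" or not value:
                continue
            scheme = urlparse(value).scheme
            # http(s) and protocol-relative URLs hit a remote server;
            # data: and cid: images are embedded in the message itself.
            if scheme in ("http", "https") or value.startswith("//"):
                self.remote_urls.append(value)


def find_remote_images(html):
    """Return the remote image URLs found in an HTML fragment, in order."""
    finder = RemoteImageFinder()
    finder.feed(html)
    finder.close()
    return finder.remote_urls
```

A client that blocks remote content would skip fetching every URL this returns until the user explicitly asks to load images, so the sender's server never sees the request.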

Since I am not in any way inclined to go read their code, I will probably just trust FF’s “recommended” flag until there is an obvious problem. Of course, by the time there is one, it’s too late. I tried the “Dark theme” on FF for a little bit and switched back to Dark Reader in no time.