The worst kind of Internet herpaderp.


Got the first game as shareware on some random “bajillion games collection!” CD when I was a kid. Even if it wasn’t a full game, the amount of game the shareware episode had was staggering. Years later I came across the second part, which was released as freeware. There was much rejoicing.

The game sure has its BS moments - like nearly impossible dragons/whatever enemies which occasionally just murder you, but dangit I like it. :3

Just got to figure out a way to “double the pixel size” so that all those crusty graphics don’t get too small on my screen, dosbox + win3.1 is nice but… could be better! :P

TBH, I kinda do prefer to run the game on Win 3.1 I have installed in my dosbox (and neatly transport the whole “C:” around my systems with Dropbox sync :D), as the UI of CoTW gets kinda too small without pixel doubling/tripling - and afaik that’s one thing Wine doesn’t do.

Sidenote: BTW I have looked up what the game Castle of the Winds is about. Man, it’s from Epic. This company was such a cool company back then.

published by Epic, but not developed by them. Anyhoo, they were cool once upon a time. :)

edit: apart from nostalgia trip, I would say you don’t need Win 3.x if you already have Win98 set up in dosbox - should run all 16bit windows apps from stone age just fine as is.

Wine is kinda magical. I really like this old 16bit Windows 3.x game “Castle of The Winds” (topdown, turn based, roguelike) which doesn’t run on any 64bit windows as is (though, you CAN, with https://github.com/otya128/winevdm, but iirc it was a bit finicky).

Wine runs CoTW fine, seemingly.

I just started watching the video and… I do agree, but worth addressing, isn’t it? The majority of desktop computers do have an nvidia gpu after all.

I do wish nvidia would get their shit together, I am dualbooting with win10 and arch (kde/wayland/nvidia), with preference on arch. But man, when their shit breaks it’s dire. My work laptop with amdgpu works so much better… but I can’t game on that system, can I x) (edit: also, shhh, SHHHHHH. games work, but I shouldn’t)

edit: done watching, as far as I can tell (caveat: I am slightly beer’d up) nvidia issues didn’t come up. Not that they’re not a thing, … are we commenting on the same video?

the bin and cue files are a cd/dvd image. IIRC you can’t mount those directly, but you can convert them to iso with bin2iso (there are probably other tools too)

An iso file you can mount with something like `mount -o loop /path/to/my-iso-image.iso /mnt/iso` and then pull the files out from there.

As for directly pulling files out from bin/cue… dunno.
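A rough sketch of the mount-and-copy step, assuming the image has already been converted to an iso. `extract_iso` is just a made-up helper name, and the temporary mount point is my own choice rather than `/mnt/iso`; mounting needs root, hence the `sudo`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: loop-mount an iso read-only, copy everything out, unmount.
extract_iso() {
    local iso="$1" dest="$2" mnt status

    # Bail out early so we never call sudo for a file that is not there.
    [ -f "$iso" ] || { echo "no such file: $iso" >&2; return 1; }

    mnt="$(mktemp -d)" || return 1
    sudo mount -o loop,ro "$iso" "$mnt" || { rmdir "$mnt"; return 1; }

    mkdir -p "$dest"
    cp -r "$mnt"/. "$dest"/
    status=$?

    # Always clean up the mount, even if the copy failed.
    sudo umount "$mnt" && rmdir "$mnt"
    return "$status"
}
```

Usage would be something like `extract_iso /path/to/my-iso-image.iso ~/extracted`.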

Running Galaxy with proton-ge. Sure, it doesn’t install linux versions of games or anything, but it works.

Basically what I did was:

- run arch btw, obviously and loaded with sarcasm, as always

- install https://aur.archlinux.org/packages/proton-ge-custom-bin

- acquired galaxy installer (GOG’s site hides download links on linux… why???)

- `proton gog-galaxy-installer.exe` to install. It installs to `~/.local/share/proton-pfx/0/pfx/drive_c/Program Files/GOG Galaxy` (or somesuch)

- I made a shortcut to launch the galaxy.exe with proton from the directory & using the directory as working directory

- profit.
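The "shortcut" step could look roughly like this. It is only a sketch: the install path is the "or somesuch" one from the list above, `galaxy.exe` is the executable name as written there, and `launch_galaxy` and `GALAXY_DIR` are names made up for the example. It assumes the `proton` command from proton-ge-custom-bin is on the PATH.

```shell
#!/usr/bin/env bash
# Assumed install location (taken from the steps above, may differ on your system).
GALAXY_DIR="${GALAXY_DIR:-$HOME/.local/share/proton-pfx/0/pfx/drive_c/Program Files/GOG Galaxy}"

# Hypothetical launcher: run the client through proton with the install
# directory as the working directory. The subshell keeps the caller's cwd intact.
launch_galaxy() {
    (
        cd "$GALAXY_DIR" || exit 1
        exec proton galaxy.exe
    )
}
```

Call `launch_galaxy` at the end of the script, or point a .desktop entry at a script that does.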

Seems to work fine. Some older version of proton-ge and/or the nvidia driver under wayland made the client a bit sluggish, but that has fixed itself. Games like Cyberpunk work fine. The galaxy overlay doesn’t, though.

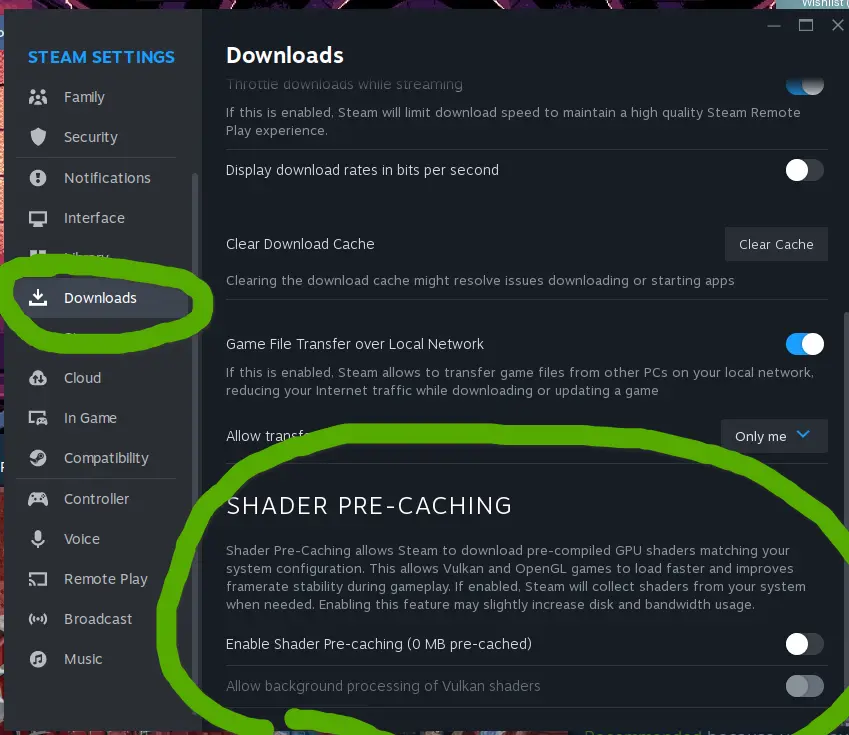

yep, I’m aware. I just haven’t observed* any compilation stutters - so in that sense I’d rather keep it off and save the few minutes (give or take) on launch

*Now, I’m sure the stutters are there and/or the games I’ve recently played on linux haven’t been susceptible to them, but the tradeoff is worth it for me either way.

Well, I do have this one game I’ve tried to play, Enshrouded, which does the shader compilation on its own, in-game. The compiled shaders seem to persist between launches, reboots, etc, but not driver/game updates. So it stands to reason they are cached somewhere. As for where, not a clue.

And if it’s the game doing the compilation, I would assume non-steam games can do it too. Why wouldn’t they?

But, ultimately, I don’t know - just saying these are my observations and assumptions based on those. :P

Overall I’m still getting used to the Steam “processing vulkan shaders” pretty much every time a game updates, but it’s worth it for the extra performance.

That can be turned off, though. Haven’t noticed much of a difference after doing so (though, I am a filthy nvidia-user). Also saves quite a bit of disk space.

Hastily read around in the related issue threads and it seems like on its own vm.max_map_count doesn’t do much… as long as apps behave. It’s some sort of “guard rail” which prevents processes from getting too many “maps”. Still kinda unclear what these maps are and what happens if a process gets to have excessive amounts.

That said: https://access.redhat.com/solutions/99913

According to kernel-doc/Documentation/sysctl/vm.txt:

- This file contains the maximum number of memory map areas a process may have. Memory map areas are used as a side-effect of calling malloc, directly by mmap and mprotect, and also when loading shared libraries.

- While most applications need less than a thousand maps, certain programs, particularly malloc debuggers, may consume lots of them, e.g., up to one or two maps per allocation.

- The default value is 65530.

- Lowering the value can lead to problematic application behavior because the system will return out of memory errors when a process reaches the limit. The upside of lowering this limit is that it can free up lowmem for other kernel uses.

- Raising the limit may increase the memory consumption on the server. There is no immediate consumption of the memory, as this will be used only when the software requests, but it can allow a larger application footprint on the server.

So, at the risk of higher memory usage, applications can go wroom-wroom? That’s my takeaway from this.

edit: ofc. I pasted the wrong link first. derrr.

edit: Suse’s documentation has some info about the effects of this setting: https://www.suse.com/support/kb/doc/?id=000016692
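For poking at this yourself, here is a small sketch. On Linux every line in `/proc/<pid>/maps` is one memory map area of that process, so counting lines shows how close a process is to the limit. `count_maps` and `map_limit` are made-up helper names.

```shell
#!/usr/bin/env bash
# Number of memory map areas a process currently has (one per line in its maps file).
count_maps() {
    wc -l < "/proc/$1/maps"
}

# The current per-process limit, same value as `sysctl vm.max_map_count` reports.
map_limit() {
    cat /proc/sys/vm/max_map_count
}

echo "limit:   $(map_limit)"
echo "current: $(count_maps "$$") maps used by this shell"

# Raising the limit until the next reboot needs root, e.g.:
#   sudo sysctl -w vm.max_map_count=<value>
```

Pass the pid of a game to `count_maps` instead of `$$` to see how many maps it is actually using (for processes of other users you may need root to read the file).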

Haven’t been following discord development, but I did test the screen sharing some days ago on linux - just for laughs - and to my surprise it didn’t CTD the app, and it actually streamed the thingy I wanted. Great!